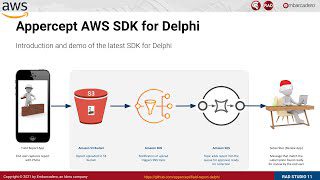

Let’s take a look at this image. Think of two or three objects you can see, focusing on the ones that catch your attention the most. Write them down, and let’s see if Google can guess them right. You can use Windows developer tools like RAD Studio Delphi to control the Google Cloud Vision API.


Google’s cloud-based vision API – making sense of what we see and much more

Google Cloud’s Vision API offers powerful pre-trained machine learning models that you can easily use in your desktop and mobile applications through REST or RPC API method calls. Let’s say you want your application to detect objects, locations, activities, animal species, and products. Or maybe you want to detect not only faces but also the emotions expressed on them. Or perhaps you need to read printed or handwritten text. All of this and much more can be done for free (for the first 1,000 units per month per feature) or at very affordable prices, and it scales with no upfront commitments.

Object localization

Object Localization is the part of the Vision API we can use to detect and extract information about multiple objects in an image. For each object detected, the following elements are returned:

- A textual description – what is it, in plain human language?

- A confidence score – how certain is the API of what it has detected?

- Normalized vertices (in the range [0, 1]) of the bounding polygon around the object – where is the object in the image?
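Because the vertices are normalized, they have to be multiplied by the image’s width and height before you can draw with them. The following is a minimal Delphi sketch of that conversion, not code from the sample project: the record TNormVertex, the helper V, the function PolygonToPixelRect, and the 1000 x 500 pixel image size are hypothetical. It takes the minimum and maximum of the vertices, so it returns an axis-aligned box even for a polygon that is not a rectangle.

```delphi
program NormalizedBoxDemo;

{$APPTYPE CONSOLE}

uses
  System.SysUtils, System.Types, System.Math;

type
  // Hypothetical record for one entry of "normalizedVertices" (both values in [0, 1])
  TNormVertex = record
    X, Y: Double;
  end;

// Small helper to build a vertex inline
function V(const X, Y: Double): TNormVertex;
begin
  Result.X := X;
  Result.Y := Y;
end;

// Scales a normalized polygon to pixels and returns its axis-aligned bounding box
function PolygonToPixelRect(const Vertices: array of TNormVertex;
  ImageWidth, ImageHeight: Integer): TRect;
var
  I: Integer;
  MinX, MinY, MaxX, MaxY: Double;
begin
  if Length(Vertices) = 0 then
    Exit(Rect(0, 0, 0, 0));
  MinX := Vertices[0].X;
  MaxX := MinX;
  MinY := Vertices[0].Y;
  MaxY := MinY;
  for I := 1 to High(Vertices) do
  begin
    MinX := Min(MinX, Vertices[I].X);
    MaxX := Max(MaxX, Vertices[I].X);
    MinY := Min(MinY, Vertices[I].Y);
    MaxY := Max(MaxY, Vertices[I].Y);
  end;
  Result := Rect(Round(MinX * ImageWidth), Round(MinY * ImageHeight),
    Round(MaxX * ImageWidth), Round(MaxY * ImageHeight));
end;

var
  R: TRect;
begin
  // "Person" polygon from the sample response, on a hypothetical 1000 x 500 px image
  R := PolygonToPixelRect([V(0.8579766, 0.64332706), V(0.9267996, 0.64332706),
    V(0.9267996, 0.8971632), V(0.8579766, 0.8971632)], 1000, 500);
  Writeln(Format('Left=%d Top=%d Right=%d Bottom=%d',
    [R.Left, R.Top, R.Right, R.Bottom]));
end.
```

For the “Person” object in the sample response shown later in this article, this prints Left=858 Top=322 Right=927 Bottom=449; those are the coordinates you would pass to a canvas to draw the highlight rectangle.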

Using RAD Studio Delphi to control the Google Cloud Vision API

With RAD Studio and Delphi we can easily set up the REST client library to take advantage of Google Cloud’s Vision API in our desktop and mobile applications. If the request is successful, the server returns a 200 OK HTTP status code and the response in JSON format.

Our RAD Studio and Delphi applications can call the API in two ways: perform detection on a local image file by sending its contents as a base64-encoded string in the body of the request, or use an image file located in Google Cloud Storage or on the Web, in which case the image contents don’t need to be sent in the request body.
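For the local-file option, the base64 string goes into the image’s "content" field instead of "source". Here is a minimal sketch using Delphi’s System.JSON and System.NetEncoding units; the function name BuildLocalImageRequest and the command-line usage are hypothetical. It creates TBase64Encoding with 0 characters per line, because the default TNetEncoding.Base64 encoder wraps its output every 76 characters, which you most likely don’t want inside a JSON string.

```delphi
program LocalImageRequestDemo;

{$APPTYPE CONSOLE}

uses
  System.SysUtils, System.IOUtils, System.JSON, System.NetEncoding;

// Builds an images:annotate request body that embeds the image bytes as base64
function BuildLocalImageRequest(const ImageBytes: TBytes; MaxResults: Integer): string;
var
  Encoder: TBase64Encoding;
  Root, Request, Image, Feature: TJSONObject;
  Requests, Features: TJSONArray;
begin
  // CharsPerLine = 0 disables the line breaks the default encoder inserts
  Encoder := TBase64Encoding.Create(0);
  Root := TJSONObject.Create;
  try
    // Each child is attached to its parent right away, so Root owns the whole tree
    Requests := TJSONArray.Create;
    Root.AddPair('requests', Requests);
    Request := TJSONObject.Create;
    Requests.AddElement(Request);

    Image := TJSONObject.Create;
    Request.AddPair('image', Image);
    Image.AddPair('content', Encoder.EncodeBytesToString(ImageBytes));

    Features := TJSONArray.Create;
    Request.AddPair('features', Features);
    Feature := TJSONObject.Create;
    Features.AddElement(Feature);
    Feature.AddPair('type', 'OBJECT_LOCALIZATION');
    Feature.AddPair('maxResults', TJSONNumber.Create(MaxResults));

    Result := Root.ToJSON;
  finally
    Root.Free; // frees every nested JSON value
    Encoder.Free;
  end;
end;

begin
  // Hypothetical usage: LocalImageRequestDemo C:\images\beach.jpg
  if ParamCount < 1 then
  begin
    Writeln('Usage: LocalImageRequestDemo <image file>');
    Exit;
  end;
  Writeln(BuildLocalImageRequest(TFile.ReadAllBytes(ParamStr(1)), 10));
end.
```

The resulting string can be posted to the same images:annotate endpoint as the URL-based request body.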

How do I set up the Google Cloud Vision Object Localization API?

Make sure you refer to the Object Localization section of the Google Cloud Vision API documentation (https://cloud.google.com/vision/docs/object-localizer), but in general terms this is what you need to do on Google’s side:

- Visit https://cloud.google.com/vision and log in with your Google (Gmail) account

- Create or select a Google Cloud Platform (GCP) project

- Enable the Vision API for that project

- Enable billing for that project

- Create an API key credential

How do I call the Google Cloud Vision API Object Localization endpoint?

Now all we need to do is call the API URL with an HTTP POST, passing a JSON request body whose feature type is OBJECT_LOCALIZATION and whose image source is the link to the image we want to analyze. You can do that with the REST client libraries available for several programming languages; a quick start guide is available in Google’s documentation (https://cloud.google.com/vision/docs/quickstart-client-libraries).

In fact, at the bottom of the Google Cloud Vision Object Localization guide (https://cloud.google.com/vision/docs/object-localizer) there is a “Try This API” option that lets you post the JSON request body shown below and see the JSON response.

```
POST https://vision.googleapis.com/v1/images:annotate
```

```json
{
  "requests": [
    {
      "features": [
        {
          "maxResults": 10,
          "type": "OBJECT_LOCALIZATION"
        }
      ],
      "image": {
        "source": {
          "imageUri": "https://live.staticflickr.com/5615/15725189485_714bf64a55_b.jpg"
        }
      }
    }
  ]
}
```
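The same call can also be made directly in code. The sketch below swaps the REST components used in this article for Delphi’s lower-level THTTPClient (System.Net.HttpClient) and posts the request body above, passing the API key as the key query parameter, just as the article’s example code does. It is an illustration rather than the article’s method; the GOOGLE_API_KEY environment variable and the AnnotateUrl helper are hypothetical.

```delphi
program AnnotateHttpDemo;

{$APPTYPE CONSOLE}

uses
  System.SysUtils, System.Classes, System.NetEncoding,
  System.Net.URLClient, System.Net.HttpClient;

const
  // The request body shown above
  RequestBody =
    '{"requests":[{"features":[{"maxResults":10,"type":"OBJECT_LOCALIZATION"}],' +
    '"image":{"source":{"imageUri":' +
    '"https://live.staticflickr.com/5615/15725189485_714bf64a55_b.jpg"}}}]}';

// Builds the images:annotate URL with the API key as the "key" query parameter
function AnnotateUrl(const ApiKey: string): string;
begin
  Result := 'https://vision.googleapis.com/v1/images:annotate?key=' +
    TNetEncoding.URL.Encode(ApiKey);
end;

var
  ApiKey: string;
  Client: THTTPClient;
  Body: TStringStream;
  Headers: TNetHeaders;
  Response: IHTTPResponse;
begin
  // Hypothetical environment variable holding the key created in the console
  ApiKey := GetEnvironmentVariable('GOOGLE_API_KEY');
  if ApiKey = '' then
  begin
    Writeln('Set GOOGLE_API_KEY first');
    Exit;
  end;

  SetLength(Headers, 1);
  Headers[0] := TNameValuePair.Create('Content-Type', 'application/json');

  Client := THTTPClient.Create;
  Body := TStringStream.Create(RequestBody, TEncoding.UTF8);
  try
    Response := Client.Post(AnnotateUrl(ApiKey), Body, nil, Headers);
    Writeln('HTTP ', Response.StatusCode); // 200 on success
    Writeln(Response.ContentAsString);
  finally
    Response := nil; // release the response before freeing the client
    Body.Free;
    Client.Free;
  end;
end.
```

The REST components do the same work, but they also map the JSON into a dataset for you, which is why the rest of the article uses them.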

What does the Google Vision API Object Localization endpoint return?

After the call, the result is a list of objects, each with a description, a confidence score (ranging from 0, no confidence, to 1, very high confidence), and a bounding polygon showing where in the image the object was found. You can use the polygon information to draw a rectangle on top of the image and highlight the objects, so the final result would look something like the image below.

For this image in particular, I was expecting Google to return additional objects like Purse/Bag and Flip-flops/Shoes. But as far as I could see, the API focuses on the most important and relevant objects in the overall scene.

What is the difference between the Google Cloud vision label detection and object localization APIs?

In a previous article (https://blogs.embarcadero.com/easily-deploy-powerful-ai-vision-tools-on-windows-and-mobile/) we went through Google Cloud Vision’s Label Detection feature, so at this point it’s worth checking the differences between the two. Label Detection is far more broad-ranging and can identify general objects, locations, activities, animal species, products, and more, but it returns no bounding polygon (localization) information.

If we input this very same image into Label Detection, the result would be “Water, Sky, Umbrella, People on beach, Blue”. As you can see, it includes activities, colors, and more general information about what we can find in the image. In some ways, it’s a more complete description of the image.

With Object Localization, the list contains the detected objects, up to the maximum configured in the maxResults parameter of the request JSON. That makes it the perfect starting point for a simple “spot the objects” game. Here is the JSON response for the request shown earlier:

```json
{
  "responses": [
    {
      "localizedObjectAnnotations": [
        {
          "mid": "/m/01g317",
          "name": "Person",
          "score": 0.8915688,
          "boundingPoly": {
            "normalizedVertices": [
              { "x": 0.8579766, "y": 0.64332706 },
              { "x": 0.9267996, "y": 0.64332706 },
              { "x": 0.9267996, "y": 0.8971632 },
              { "x": 0.8579766, "y": 0.8971632 }
            ]
          }
        },
        {
          "mid": "/m/0hnnb",
          "name": "Umbrella",
          "score": 0.7732811,
          "boundingPoly": {
            "normalizedVertices": [
              { "x": 0.19065358, "y": 0.11280499 },
              { "x": 0.8298022, "y": 0.11280499 },
              { "x": 0.8298022, "y": 0.92724794 },
              { "x": 0.19065358, "y": 0.92724794 }
            ]
          }
        },
        {
          "mid": "/m/01mzpv",
          "name": "Chair",
          "score": 0.7359347,
          "boundingPoly": {
            "normalizedVertices": [
              { "x": 0.16712596, "y": 0.3217815 },
              { "x": 0.4540786, "y": 0.3217815 },
              { "x": 0.4540786, "y": 0.95641553 },
              { "x": 0.16712596, "y": 0.95641553 }
            ]
          }
        }
      ]
    }
  ]
}
```
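As a starting point for a “spot the objects” game, here is a minimal sketch that compares the objects a player wrote down with the names in a response like the one above. It uses Delphi’s System.JSON unit; CountMatches and the trimmed SampleResponse constant are hypothetical, and the case-insensitive exact match is just one simple choice (it would not, for example, treat “Bag” and “Purse” as the same object).

```delphi
program SpotTheObjectsDemo;

{$APPTYPE CONSOLE}

uses
  System.SysUtils, System.JSON;

const
  // Trimmed copy of the response above (only the fields this sketch reads)
  SampleResponse =
    '{"responses":[{"localizedObjectAnnotations":[' +
    '{"name":"Person","score":0.8915688},' +
    '{"name":"Umbrella","score":0.7732811},' +
    '{"name":"Chair","score":0.7359347}]}]}';

// Returns how many of the player's guesses match a detected object name
// (case-insensitive); each guess is counted at most once
function CountMatches(const ResponseJson: string;
  const Guesses: array of string): Integer;
var
  Root: TJSONValue;
  Objects: TJSONArray;
  Item: TJSONValue;
  I: Integer;
begin
  Result := 0;
  Root := TJSONObject.ParseJSONValue(ResponseJson);
  if Root = nil then
    Exit; // not valid JSON
  try
    if not Root.TryGetValue<TJSONArray>(
      'responses[0].localizedObjectAnnotations', Objects) then
      Exit; // no objects in the response
    for I := Low(Guesses) to High(Guesses) do
      for Item in Objects do
        if SameText(Item.GetValue<string>('name'), Guesses[I]) then
        begin
          Inc(Result);
          Break; // stop at the first match for this guess
        end;
  finally
    Root.Free;
  end;
end;

begin
  // Guesses a player might write down before looking at the API result
  Writeln(CountMatches(SampleResponse, ['Umbrella', 'Chair', 'Purse']),
    ' of 3 guesses found');
end.
```

With the guesses Umbrella, Chair, and Purse, it prints “2 of 3 guesses found”.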

How do I connect my applications to Google Cloud Vision Object Localization API?

Once you have followed the basic steps to set up the Object Localization API on Google’s side, go to the Console, open the Credentials menu item, click the “Create Credentials” button, and add an API key. Copy this key, as we will need it later.

RAD Studio makes it easy to call the Google Cloud Vision API with REST

RAD Studio Delphi and C++Builder make it very easy to connect to APIs, as you can use the REST Debugger to automatically create the REST components and paste them into your app.

In Delphi, all the work of making the API call is done by three components: TRESTClient, TRESTRequest, and TRESTResponse. Once you have connected successfully in the REST Debugger and copied and pasted the components, you will notice that the API URL is set in the BaseURL property of TRESTClient. On the TRESTRequest component you will see that the method is set to rmPOST, the ContentType is set to ctAPPLICATION_JSON, and that it contains one request body parameter for the POST.

Run RAD Studio Delphi and, on the main menu, click Tools > REST Debugger. Configure the REST Debugger by setting the content type to application/json and adding the POST URL, the JSON request body, and the API key you created. Once you click the “Send Request” button, you should see the JSON response, just like the one shown above.

How do I build a Windows desktop or Android/iOS mobile device application using the Google Cloud Vision API Object Localization?

Now that you have successfully configured and tested your API call in the REST Debugger, click the “Copy Components” button, go back to Delphi, and create a new application project. Then paste the components onto your main form.

Add some very simple code to a TButton OnClick event to make sure everything is configured correctly, and you’re done! In around five minutes we have made our very first call to the Google Vision API and can receive a JSON response for any image on which we want to perform Object Localization. Note that on the TRESTResponse component, the RootElement is set to ‘responses[0].localizedObjectAnnotations’. This means that only the ‘localizedObjectAnnotations’ element of the JSON is pulled into the in-memory table (TFDMemTable).


Example code showing how to call the Google Cloud Vision API in Delphi

```delphi
procedure TForm1.Button1Click(Sender: TObject);
var
  APIkey: String;
begin
  FDMemTable1.Active := False;

  // Client settings (the same values the REST Debugger generates)
  RESTClient1.ResetToDefaults;
  RESTClient1.Accept := 'application/json, text/plain; q=0.9, text/html;q=0.8,';
  RESTClient1.AcceptCharset := 'UTF-8, *;q=0.8';
  RESTClient1.BaseURL := 'https://vision.googleapis.com';
  RESTClient1.HandleRedirects := True;
  RESTClient1.RaiseExceptionOn500 := False;

  // Replace the placeholder with the API key created in the Google Cloud Console
  APIkey := 'put here your Google API key';
  RESTRequest1.Resource := Format('v1/images:annotate?key=%s', [APIkey]);
  RESTRequest1.Client := RESTClient1;
  RESTRequest1.Response := RESTResponse1;
  RESTRequest1.SynchronizedEvents := False;
  RESTResponse1.ContentType := 'application/json';

  // Request body: Edit2 holds maxResults, Edit1 holds the image URL
  RESTRequest1.Params[0].Value := Format(
    '{"requests": [{"features": [{"maxResults": %s,"type": "OBJECT_LOCALIZATION"}],"image": {' +
    '"source": {"imageUri": "%s"}}}]}', [Edit2.Text, Edit1.Text]);

  // Only the localizedObjectAnnotations array is loaded into FDMemTable1
  RESTResponse1.RootElement := 'responses[0].localizedObjectAnnotations';
  RESTRequest1.Execute;

  // Show the raw response headers and JSON content
  Memo2.Lines := RESTResponse1.Headers;
  Memo3.Lines.Text := RESTResponse1.Content;
end;
```

The sample application features a TEdit where you paste the link to the image you want to analyze, another TEdit for the maxResults parameter, a TMemo to display the JSON result of the REST API call, and a TStringGrid to navigate and display the data in tabular form. Together they demonstrate how easily the JSON response can be integrated with a TFDMemTable component. When the button is clicked, the image is analyzed and the application presents the response JSON both as text and as data in a grid. Now you have everything you need to work with the response data and process it in whatever way best suits your needs!
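If you want to process the results outside the grid, for example to keep only confident detections, the sketch below walks the same ‘responses[0].localizedObjectAnnotations’ path with System.JSON’s TJSONValue.FindValue instead of going through the dataset. It is a standalone console illustration, not how TRESTResponse and TFDMemTable work internally; ListObjects and the 0.75 threshold are hypothetical choices.

```delphi
program RootElementDemo;

{$APPTYPE CONSOLE}

uses
  System.SysUtils, System.JSON;

const
  // The path assigned to RESTResponse1.RootElement in the example code
  RootPath = 'responses[0].localizedObjectAnnotations';

// Prints every object under RootPath whose score is at least MinScore
// and returns how many objects were printed
function ListObjects(const ResponseJson: string; MinScore: Double): Integer;
var
  Root, Found, Item: TJSONValue;
  Score: Double;
begin
  Result := 0;
  Root := TJSONObject.ParseJSONValue(ResponseJson);
  if Root = nil then
    Exit; // not valid JSON
  try
    Found := Root.FindValue(RootPath);
    if not (Found is TJSONArray) then
      Exit; // nothing detected, or an error response
    for Item in TJSONArray(Found) do
    begin
      Score := Item.GetValue<Double>('score');
      if Score >= MinScore then
      begin
        Writeln(Format('%-10s %.2f', [Item.GetValue<string>('name'), Score]));
        Inc(Result);
      end;
    end;
  finally
    Root.Free;
  end;
end;

begin
  // Trimmed sample response; only objects scoring 0.75 or more are listed
  ListObjects('{"responses":[{"localizedObjectAnnotations":[' +
    '{"name":"Person","score":0.8915688},' +
    '{"name":"Umbrella","score":0.7732811},' +
    '{"name":"Chair","score":0.7359347}]}]}', 0.75);
end.
```

With this trimmed response it lists Person and Umbrella and skips Chair, whose score of 0.7359347 falls below the threshold.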

Summary of what we learned in this article

In this blog post we’ve seen how to sign up for the Google Cloud Vision API to perform Object Localization on images. We’ve seen how to use the RAD Studio REST Debugger to connect to the endpoint and copy the resulting components into a real application. And finally, we’ve seen how easy and fast it is to use RAD Studio Delphi to create a real Windows (and Linux, macOS, Android, and iOS) application that connects to the Google Cloud Vision API, runs Object Localization image analysis, and returns the result as an in-memory dataset ready for you to iterate over!

You can download the full example code for the desktop and mobile Google Cloud Vision API Object Localization REST demo here: https://github.com/checkdigits/DelphiGoogleObjectLocalizationAPI_example

Are you ready to make your programs understand real world images? It’s easy with RAD Studio Delphi!
